Deepfake fraudsters impersonate FTSE chief executives

A growing number of FTSE chief executives are being impersonated by fraudsters who are using artificial intelligence to create convincing deepfake clones, The Times can reveal.

A number of these sophisticated “CEO scams” have received publicity in recent months but many more are going unreported, according to cybersecurity experts.

At least five FTSE 100 companies and one FTSE 250 company have been subject to impersonation attacks this year, although the true figure is likely to be substantially higher.

WPP, discoverIE and Octopus Energy are among the companies known to have been targeted in what are becoming increasingly personalised attacks. Other companies contacted by The Times say they have either been hit or are monitoring the potential new fraud closely.

Aiden Sinnott, a security researcher at UK-based cybersecurity company Secureworks, said: “Chief executive fraud has been huge anyway and we’re seeing it more and more with deepfakes.”

David Sancho, a senior threat researcher at Trend Micro, the cybersecurity software company, added: “With the advent of video creation of decent deepfakes this is ramping up. No wonder that these criminals [are] jumping onto this trend.”

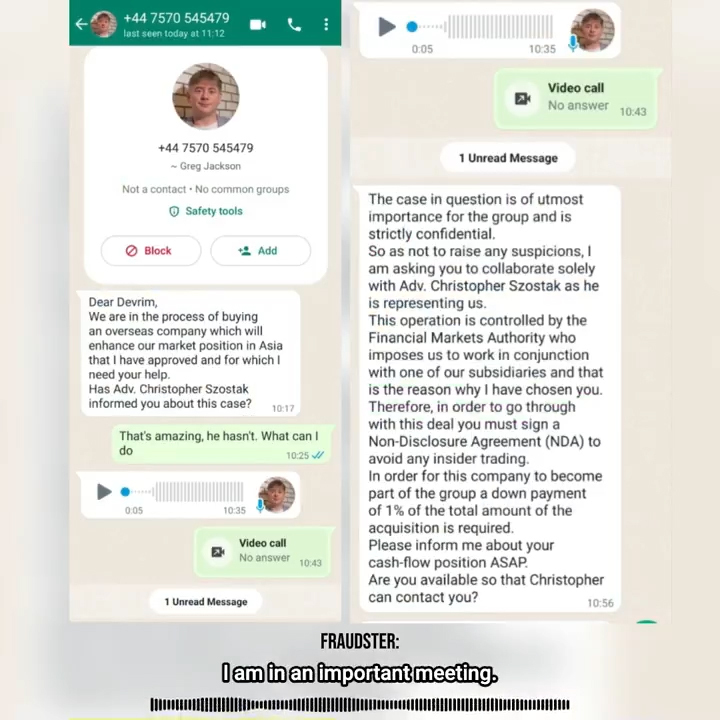

The pattern for the scam tends to start with WhatsApp messages instructing the victim to remain silent about the instructions they are about to receive, telling them a confidential acquisition is in the offing and they need to transfer money for a deal to complete.

While the phone number on WhatsApp might not be the chief executive’s, their image is used, very often the official photo from the company’s website.

The boss’s voice is then cloned and a voice note is sent, generated using easily available software, reiterating the need for secrecy and urgency for a speedy transfer of funds.

Nick Jefferies, chief executive of the electronics company discoverIE, told The Times that his voice had been faked: “I think an English person would probably have spotted that that isn’t me, because the message says ‘I will’ instead of ‘I’ll’, but we have a lot of people outside the UK, so it’s a real risk for us.”

After he was impersonated, Mark Read, WPP’s chief executive, warned staff: “You can see how these techniques are specifically designed to target individuals at a level that is far more tailored and psychological than the scams defrauding the vulnerable or general public.”

Industry experts have raised concerns that the rise in attacks is not being properly tracked. The National Fraud Intelligence Bureau, which is responsible for recording fraud cases in the UK, does not monitor impersonation scams.

However, the FBI’s Internet Crime Report for 2023 recorded almost 300,000 complaints for phishing, or spoofing, where attackers attempt to deceive individuals into providing sensitive information by pretending to be trustworthy entities, more than the top eight most-reported cybercrimes put together.

“There are a lot of possibilities for the misuse of deep fakes in all sorts of areas and the ‘CEO scam’, which is usually how people refer to this one, is a very common one,” said Sancho.

The rise in impersonation scams is being fuelled in part by wider access to powerful AI tools. A growing number of illegitimate businesses provide voice cloning and other services directly to cybercriminals, in relatively easy-to-use formats often delivered through the messaging app Telegram.

Sancho said that he was aware of at least three AI-based tools designed solely for the purpose of this fraud that were being sold on the “dark web”.

‘The AI clone did sound like me’

When one of discoverIE’s new executives received a WhatsApp message purporting to be from an in-house lawyer, asking her to transfer half a million pounds for a company acquisition, it appeared to be genuine, if a little odd.

It was for a down payment, the messages said, of 1 per cent of the total value of the transaction.

The scammers behind the fraud attempt had clearly done their research.

Their target was the head of a foreign company recently acquired by discoverIE. She was therefore relatively new to the group, and English was not her first language.

As if the text messages weren’t enough, she was also sent a well-crafted voice note via WhatsApp that was actually an AI clone of the voice of discoverIE’s chief executive, Nick Jefferies.

“It does sound like me,” Jefferies said. “Whether it was AI or just edited words, I don’t know.”

Some of the language was odd. The WhatsApp messages urged the executive to keep quiet about the transfer and to sign a non-disclosure agreement because of “Financial Markets Authority” rules. No such UK body exists, but “to an overseas operating company head, who’s got no experience of UK listed businesses, they might fall for it”, Jefferies said.

What the scammers could not have foreseen was that when she received the text, Jefferies was nearby as the pair — along with a dozen or so other senior executives — had been visiting an exhibition in Germany.

It seemed odd to the individual that Jefferies would authorise such a transfer without mentioning it in person, and so she rang him to check.

“It frightened the life out of her, because she so nearly fell for it”, Jefferies said.

The FTSE 250 business is far from alone in being targeted by these artificial intelligence-based scams.

Tiago Henriques, vice-president of research for Coalition, the cyber-insurer, is among those who told The Times they were seeing a rise in voice cloning deepfakes and their “efficacy”.

Greg Jackson, the chief executive of Octopus Energy, also had his voice cloned. “The ‘impersonation’ is eerily impressive”, he remarked in a post on X.

Just as in the WhatsApp messages sent to discoverIE, the Octopus perpetrator emphasised the importance of secrecy so as not to “jeopardize” the deal. The American spelling was a giveaway that something was amiss.

Four other FTSE 100 companies told The Times that they have been the targets of similar scams. However, this is still likely to be a significant undercount. Most of those approached did not want to comment, although many more said they were aware of the issue and were monitoring it closely.

“Companies may not have experienced the kind of WPP-levels of deep fake fraud, but I would be very surprised if they’ve not had more basic versions of it with WhatsApp impersonations using photos of senior leaders,” said Ben Aung, chief risk officer at Sage, the FTSE 100 software business.

Aung, who has spent 20 years in technology and national security roles within the government, said that such attacks were re-emerging thanks to the influence of artificial intelligence.

“I think when new opportunities come along where there’s a vulnerability in the environment that they can exploit, then you get a surge,” he said.

It is difficult to get clear information about the scale of the threat posed to companies by this type of impersonation attack, either with or without AI.

The National Fraud Intelligence Bureau, which is responsible for recording fraud and some types of cybercrimes, does not monitor cases of impersonation fraud, or phishing attacks — when fraudulent communications are sent from what appears to be a reputable source — in most cases.

However, Coalition, a US-based cybersecurity insurer, said that last year insurance claims for fraudulent money transfers accounted for more than a quarter of all claims, the joint most common form of cyberattack along with email hacking.

“We’ve definitely seen changes since the generative AI wave has started,” said Henriques. “We’re not seeing novel techniques, we’re seeing them fine-tuned.”

The models and tools needed to design high-quality voice impersonations, and more recently AI-avatars, have slipped out of the hands of the companies that first introduced them.

Not only are such models not proprietary anymore, but they have been adapted and repackaged for use by criminals, often via relatively user-friendly platforms accessible through the Telegram instant messaging app and other services.

“The fact is that it’s not especially challenging from a technical perspective. You don’t need to be a malware developer. The tools to do this tend to be open source, they’re not particularly difficult to get access to,” said Secureworks’ Aiden Sinnott.

“There’s a fairly low technical barrier to entry, but the amount of money that these groups are making off it is huge.”

A report by the US-based cybersecurity company Recorded Future last year found that references to voice cloning on dark web sources had dramatically increased in recent years.

The report identified numerous voice cloning platforms, accessible primarily via Telegram, that were advertised on dark web bulletin boards alongside more reputable services such as ElevenLabs.

Oli Buckley, professor in cybersecurity at Loughborough University, said he was seeing more and more cases: “You pay £5 a month to get a good clone. You need only one or two minutes of clean audio, which is quite easy to get with a chief executive, for example you can use financial results presentations which are clear without lots of background noise. You upload a sample and then with a text to speech function, you can make them say whatever you want.”

It’s not entirely clear where such attacks typically originate, given the relative novelty of artificial intelligence-enhanced cyberattacks. However, a major group, composed of Israeli and French nationals, which made at least €38 million from chief executive fraud, was dismantled by Europol in February last year, and several other cases have involved West African cybercrime groups.

As artificial intelligence becomes cheaper and more accessible, the sophistication and popularity of such impersonation scams are likely to increase.
